A quick look at UK petitions data in Python

More gentle exploration of Python. This time, a few lines of code for grabbing Parliament petition data.

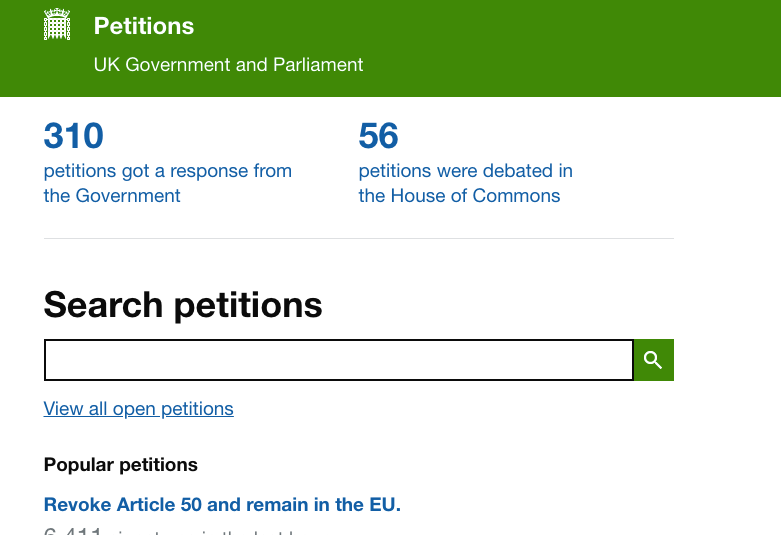

Last week I walked my MA students through a gentle introduction to coding. This week, I wanted to build on that with a real-world example of how code can become a tool for repeating and automating tasks. I decided to look at data from the UK government's Petitions website.

The site has been in the news a lot recently, with the Revoke Article 50 petition in particular becoming a talking point. So I wanted to show how we could use some simple Python (built on those basic principles) to grab and analyse data from the site.

So I set out to do the following:

- Explore how to grab data from the Petitions web site

- Explore the data

- Do some quick analysis of the data to show where the signatures for the petition came from and which constituencies had the most signatories. Both of these have been a cause for debate in the last week.

I didn't want to start with huge amounts of data, so I picked another petition: "People found with a knife to get 10 years and using a knife 25 years in prison". Every petition page has a link to its JSON data, but you can get any petition's data in JSON format simply by adding .json to the end of its URL.

So the code below shows the results for the knife crime petition, using the URL:

https://petition.parliament.uk/petitions/233926.json

If you want to look at the Article 50 petition, you'll need to use:

https://petition.parliament.uk/petitions/241584.json
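Since the JSON address is just the petition page address with `.json` added, that step is easy to wrap in a small function. This is a minimal sketch rather than anything the Petitions site provides: the name `petition_json_url` is hypothetical, and it assumes petition pages follow the `https://petition.parliament.uk/petitions/<id>` pattern shown above.

```python
def petition_json_url(petition):
    """Return the JSON URL for a petition, given its ID or its page URL.

    Hypothetical helper: it just adds '.json' to the end of the page URL,
    assuming the https://petition.parliament.uk/petitions/<id> pattern.
    """
    base = 'https://petition.parliament.uk/petitions/'
    text = str(petition).strip().rstrip('/')
    # A bare ID such as 233926 gets the standard petitions prefix
    if text.isdigit():
        text = base + text
    # Don't add .json twice if it is already there
    if not text.endswith('.json'):
        text += '.json'
    return text


print(petition_json_url(233926))
print(petition_json_url('https://petition.parliament.uk/petitions/241584'))
```

Both calls print the two JSON addresses listed above, so you can paste in either a petition number or the page URL from your browser.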

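Here's the code for ranking by country (for the knife crime petition):

```python
import requests
import pandas as pd

# Download the petition's data and parse the JSON into a Python dictionary
response = requests.get('https://petition.parliament.uk/petitions/233926.json')
response.raise_for_status()  # stop here if the request failed
petition_data = response.json()

# Navigate the nested JSON down to the list of per-country counts
country_data = petition_data['data']['attributes']['signatures_by_country']

# Turn the list into a table and sort it, biggest count first
country_df = pd.DataFrame(country_data)
country_rank = country_df.sort_values(by=['signature_count'], ascending=False)

print(country_rank)
```

And here's the code for ranking by constituency for the same petition: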

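```python
import requests
import pandas as pd

# Download the petition's data and parse the JSON into a Python dictionary
response = requests.get('https://petition.parliament.uk/petitions/233926.json')
response.raise_for_status()  # stop here if the request failed
petition_data = response.json()

# Same path as before, but this time the per-constituency counts
constituency_data = petition_data['data']['attributes']['signatures_by_constituency']

# Turn the list into a table and sort it, biggest count first
constituency_df = pd.DataFrame(constituency_data)
constituency_rank = constituency_df.sort_values(by=['signature_count'], ascending=False)

print(constituency_rank)
```

(The links take you to Repl.it, where you can run the code.)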
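One way to put a number on the "where did the signatures come from" question is to compare the UK's count with the total across all countries in `signatures_by_country`. This is a minimal sketch: `uk_share` is a hypothetical helper, it assumes the UK appears in the country list with the code `GB` (worth checking against the data for your petition), and the `sample` dictionary is made up purely to show the shape of the JSON, not real figures.

```python
import pandas as pd


def uk_share(petition_data, uk_code='GB'):
    """Fraction of country-attributed signatures that came from the UK.

    Assumes the UK is listed in 'signatures_by_country' with code 'GB'.
    """
    countries = pd.DataFrame(petition_data['data']['attributes']['signatures_by_country'])
    if countries.empty:
        return 0.0
    total = countries['signature_count'].sum()
    uk_total = countries.loc[countries['code'] == uk_code, 'signature_count'].sum()
    return float(uk_total / total) if total else 0.0


# Made-up sample with the same nesting as the real JSON (not real data)
sample = {'data': {'attributes': {'signatures_by_country': [
    {'name': 'United Kingdom', 'code': 'GB', 'signature_count': 90},
    {'name': 'France', 'code': 'FR', 'signature_count': 6},
    {'name': 'Spain', 'code': 'ES', 'signature_count': 4},
]}}}

print(uk_share(sample))  # 0.9
```

With real data, you would pass in the dictionary returned by `response.json()` instead of `sample`.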

Reuse: Analysing other petitions

The great thing about the data from the Petitions site is that the structure is always the same. That gave us a chance to briefly look at 'navigating' JSON. But it also means that the only thing we need to change to run the same analysis on another petition is the URL in the `response = requests.get(' ')` bit of the code.
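Because only the URL changes, the two scripts above can be folded into a pair of reusable functions: one that downloads a petition's JSON and one that ranks any of its signature lists. This is a sketch of one way to do it, not the code from the notebook; the names `fetch_petition` and `rank_signatures` are hypothetical, and it assumes the `['data']['attributes']` path used above holds for every petition.

```python
import requests
import pandas as pd


def fetch_petition(url):
    """Download a petition's JSON data. The URL is the only part that changes."""
    response = requests.get(url, timeout=30)
    response.raise_for_status()  # stop if the request failed
    return response.json()


def rank_signatures(petition_data, key):
    """Sort one signature list (e.g. 'signatures_by_country' or
    'signatures_by_constituency'), biggest count first."""
    records = petition_data['data']['attributes'][key]
    df = pd.DataFrame(records)
    return df.sort_values(by='signature_count', ascending=False).reset_index(drop=True)
```

Usage would then be `data = fetch_petition('https://petition.parliament.uk/petitions/241584.json')` followed by `rank_signatures(data, 'signatures_by_constituency').head(10)` for the ten constituencies with the most signatures. Downloading once and ranking twice also avoids fetching the same petition in two separate scripts.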

This underlines the point I wanted to make about reuse. You may not fully understand what all the code is doing here, but it works, and you know that you could use it again when the need arises.

There's a more detailed walkthrough of the code in a Google Colab notebook. If you want to tinker with the code, you can load the page and then select File > Open in playground mode. There's also a version on GitHub.

This isn't meant to be comprehensive, and there's not a huge amount of analysis going on here. But I hope it encourages people to explore and play with a bit of code.